Back in secondary school I was fascinated by the concept of ray tracing. At first I thought it was the ultimate rendering algorithm - that photographic-quality computer-generated pictures would be possible just as soon as we managed to accurately model the way light is reflected or refracted by the surfaces we wanted to visualize.

Then it occurred to me that maybe shooting rays from the eye back to the light source was not necessarily the best way to go about rendering objects. What if we shot rays from the light sources to the eye instead? The trouble with that approach, I quickly realized, was that most light rays are absorbed by other surfaces before reaching the eye, so you would have to shoot an awful lot of photons to get anything approximating an image.
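To put a rough number on "an awful lot", here is a minimal Python sketch of the simplest possible case: a point light in an otherwise empty scene and a small spherical "eye". This ignores absorption and bouncing entirely, so it only shows how few photons reach the eye directly. The function names, the eye radius and the distance are all made up for illustration.

```python
import math
import random


def fraction_reaching_eye(n_photons, eye_radius, eye_distance, rng):
    """Estimate the fraction of photons from a point light that hit a spherical eye."""
    # A photon hits the eye if its direction lies inside the cone that
    # just encloses the eye sphere, as seen from the light.
    cos_limit = math.sqrt(1.0 - (eye_radius / eye_distance) ** 2)
    hits = 0
    for _ in range(n_photons):
        # For directions uniform over the sphere, the cosine of the angle
        # to any fixed axis is uniform in [-1, 1], so sampling it is enough.
        cos_angle = rng.uniform(-1.0, 1.0)
        if cos_angle >= cos_limit:
            hits += 1
    return hits / n_photons


def exact_fraction(eye_radius, eye_distance):
    """Solid angle of the same cone divided by the full sphere (4*pi)."""
    cos_limit = math.sqrt(1.0 - (eye_radius / eye_distance) ** 2)
    return (1.0 - cos_limit) / 2.0


if __name__ == "__main__":
    rng = random.Random(0)
    print(fraction_reaching_eye(200_000, 0.1, 1.0, rng))
    print(exact_fraction(0.1, 1.0))
```

Even with an eye whose radius is a tenth of its distance from the light, only about 0.25% of the photons arrive, and a real pupil is far smaller than that. Everything else is wasted work.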

The next step in this mental process was to imagine combining forward and reverse ray tracing - shoot rays from the light source and bounce them around a bit to figure out where the light ended up in the scene, and then shoot rays from the eye into the scene (using the normal ray-tracing algorithm) to actually render the image, using the first set of rays to figure out which parts were lit up, which were in shadow and so on. Unfortunately I never got around to implementing this idea.
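The two-pass idea can be sketched in a few lines of Python. This is a toy version under heavy assumptions, not a real renderer: the scene is a 2D cross-section with a point light, one horizontal blocker and a floor, photons are absorbed on first contact instead of bouncing, and the "eye rays" are reduced to brightness lookups at points on the floor. All names and coordinates are hypothetical.

```python
import math
import random

LIGHT = (5.0, 5.0)          # point light position (x, y)
OCCLUDER = (4.0, 6.0, 2.0)  # horizontal blocker: x_min, x_max, height y
FLOOR = (0.0, 10.0)         # floor lies on y = 0 between these x values


def trace_photons(n_photons, rng):
    """Pass 1: shoot photons from the light and record where they land on the floor."""
    lx, ly = LIGHT
    x_min, x_max, oy = OCCLUDER
    hits = []
    for _ in range(n_photons):
        # Random downward direction, measured as an angle from straight down.
        theta = rng.uniform(-math.pi / 2, math.pi / 2)
        slope = math.tan(theta)
        # Where does the photon cross the blocker's height?
        x_at_occluder = lx + (ly - oy) * slope
        if x_min <= x_at_occluder <= x_max:
            continue  # absorbed by the blocker, never reaches the floor
        x_floor = lx + ly * slope
        if FLOOR[0] <= x_floor <= FLOOR[1]:
            hits.append(x_floor)
    return hits


def brightness(x, photon_hits, radius=0.5):
    """Pass 2 lookup: count stored photons near the point an eye ray hit."""
    return sum(1 for p in photon_hits if abs(p - x) <= radius)


if __name__ == "__main__":
    photon_map = trace_photons(20_000, random.Random(1))
    # One brightness sample per unit of floor, as if ten eye rays had hit it.
    print([brightness(x + 0.5, photon_map) for x in range(10)])
```

The samples directly under the blocker come out as zero (shadow), while the floor on either side collects photons. A linear scan over every stored photon is fine for a toy like this, but anything serious would need a spatial data structure for the lookups.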

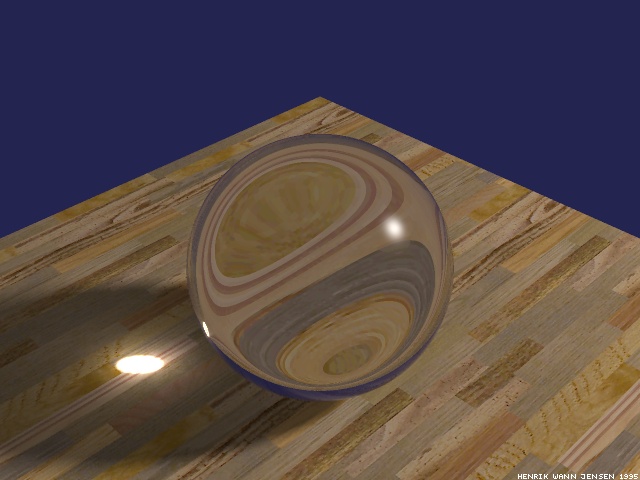

Turns out that someone beat me to it anyway - Henrik Wann Jensen had already invented photon mapping some years before and rendered some beautiful images of Cornell boxes, caustics and architectural models. All the good ideas have already been thought of...

If computers continue to get more powerful at the rate they have been, in 10-20 years it should be possible to render photon-mapped images and model the subsurface scattering needed to render translucent objects (like people), all in real time. By then we will all have high dynamic range displays (I saw one of the BrightSide displays at a Microsoft Research TechFest earlier this year - it was awesome) and will have sophisticated procedural geometry algorithms for rendering all sorts of complex natural objects. These technologies (especially combined) will be able to generate some truly awesome images, increasingly difficult to distinguish from real life.

The future of computer graphics looks good!
