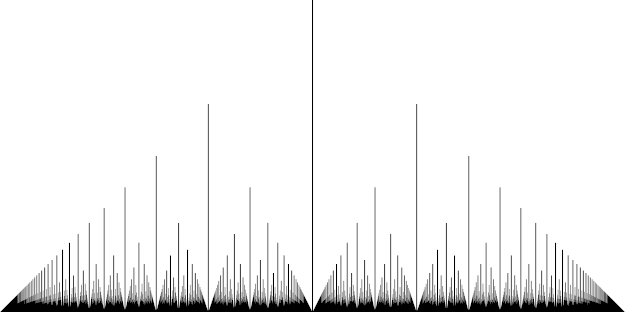

I saw this interesting visualization of Atari 2600 binaries. It makes me want to do something similar, but arrange the instructions according to the position of the raster beam when the instruction is executed rather than the position in the ROM. The 2600 is a unique platform in that it lacks a frame buffer, so to produce coherent images, the code must be synchronized with the raster beam. If we make an image that has 4 horizontal pixels per color carrier cycle, that gives an 912 pixel wide image. There are 76 CPU clock cycles per raster line and instructions take 2-7 cycles giving us 24-84 horizontal pixels per instruction, which (with a bit of squishing) ought to be enough. A raster line would have to correspond to a line of text so we probably wouldn't want to show a full frame (field). However, a typical game will only have a few different "line sequences" so a full frame image would be very repetitive anyway.

CPU usage visualization

September 5th, 2011How do we get there from here?

September 4th, 2011So we have some ideas about how we want the world to look - the next question is "How do we get there from here?" It seems to be very difficult to get anything changed at least in US politics because there are so many entrenched interests, but here's the best idea I've had about it so far.

We use this fantastic information transfer medium of the internet to get as many people interested, involved and well informed as possible. We get these people to vote in on-line elections (that are at least to begin with unofficial, non-binding and informal but are as secure as possible and only open to registered, authenticated voters). We then try to persuade politicians to take these polls into account (as well as what they suppose the opinions of the rest of the electorate to be) when making their decisions. Participating in this system costs the politician nothing at first (since when they disagree with what the poll says they can say "oh that's just the opinion of a small minority of people, most people have the opposite opinion"), but as more and more people participate in these polls they eventually become impossible to ignore ("it's the will of the people"). When politicians vote against the will of the people, we call them out on it and hopefully get them voted out of office in the next election. Once the system has sufficient momentum, we start to field candidates who run on a platform of voting according to the results of these polls rather than their own opinions. Then eventually we can transition away from having elected politicians at all and just have a system of direct delegated democracy so that the people can vote (directly or by proxy) on every piece of proposed legislation. This is much less susceptible to corruption by corporations, because decisions are not made by wealthy minority.

In the meantime, we have to do something about the media. It's no good having a democracy if people are voting against their own interests and blindly following the instructions of corporate mouthpieces. I think this is more of a US problem than a UK one the BBC is much more impartial than private media can be. Here in the US there are massive numbers of people who get all their information from Fox News and conservative talk radio which are really just fronts for organizations like Koch Industries. This is how we get public support for absurd wars and other policies that are disastrous for almost all of the people who are voting for them. The usual method we use as a society for determining which side of an argument is true is the judicial system, so I'm wondering if we can somehow make news organizations liable for things that are not true that they present as news. Don't make the penalty too big because sometimes mistakes happen but make it large enough so that the likes of Fox can't continue their current scam. And if that puts too much power in the hands of judges, then we'll need some entirely new system of checks and balances to prevent abuse there. I guess to avoid stepping on the first amendment there would have to be some kind of voluntary labeling scheme for news organizations, and we would have to learn to take with rather more salt news from sources which don't stand by what they say by participating in this scheme.

We still need to keep the economy growing as fast as possible. Unlike the conservatives, I don't think the way of doing this is reducing taxes on the rich and reducing services on the poor. I think we need more small businesses, and that there are a lot of impediments preventing people from setting up or taking over small businesses. These impediments need to be identified and removed. More small businesses means more competition for large corporations. In the US, creating a functional public healthcare system would be a great benefit for small businesses (companies in the US are can't attract the best employees without providing health insurance plans, which is much more expensive for small companies than for big ones).

Hacker show and tell

September 3rd, 2011As CodeSourcery employees work from home, we get together for a week-long meeting once a year in order to better understand each other. CodeSourcery was bought by Mentor Graphics last year, but the tradition continues for the division.

One of my favorite parts of the annual meeting is getting together with extremely talented people and having fascinating discussions about all sorts of technical things. Sometimes these are work related and sometimes we show each other our personal programming projects (the same sorts of things I talk about in this blog).

I often think it would be great to be able to have these kinds of in-person discussions more often than once a year. I've thought about setting up some kind of regular meetup in Seattle for just this kind of thing. I found Hack and Tell which seems to be exactly what I'm looking for except for the fact that it's in New York, so perhaps I should try to set up a Seattle chapter.

I also found a few things in Seattle which are similar but weren't quite what I was looking for one way or another:

- Dorkbot seems be more "electronic art" centric (although I haven't been to one of their meetings).

- Ignite Seattle seems to have a more formal format - 5 minute talks.

- Saturday House and Meet at the Pig seem to be inactive.

- Seattle Wireless HackNight at Metrix Create:Space - I actually went to this for a couple of months earlier this year. It's a very cool space and the people there seemed interesting, but the focus seemed to be more on having a set place and time to do some hacking and less on showing off personal projects to each other. I stopped going after a while partly because it didn't seem to be quite what I was looking for but mostly because it was in the evening which is problematic when you have two children who wake up you up at 7am sharp (at the latest) every day - if I don't go to bed at a sensible time I tend to be a bit of a zombie the next day.

Euclid's orchard improved image

September 2nd, 2011I wasn't very impressed with the Euclid's Orchard perspective picture on Wikipedia (grey background, limited number of "trees", no anti-aliasing) so I made my own:

Postel's law revisited

September 1st, 2011There is a general principle in computing called the Robustness principle or Postel's law, which says that you should be conservative in what you send but liberal in what you accept.

This seems like a no-brainer, but adhering to the principle does have some disadvantages. Being liberal in what you accept introduces extra complexity into software. More problematically, being liberal in what you accept allows others to be less conservative in what they send. Nowhere is this more noticable than HTML. The first web browsers were extremely liberal in what they accepted - allowing HTML that is broken in all sorts of different ways. Many people wrote HTML by hand, tested it on just one browser and then uploaded it. Other browsers would often mis-render the broken HTML, leading people to put little buttons with slogans like "best viewed in Netscape 4" on their pages. As HTML evolved, continuing to accept all this misformed HTML while adding new features became a big headache for browser developers.

Lately, the best practices involve marking your HTML in such a way that browsers will only accept it if it's correct - that way you find out quickly about any mistakes and fix them early.

In general, I think that new protocols should now be designed to have a very simple canonical form and that only data that adheres to this form should be accepted - other data should be rejected as early as possible.

Inputs directly from users can still be interpreted liberally just because it makes computers easier to use, but that data should be transformed as early as possible into canonical form.

Progressively optimizing compiler

August 31st, 2011Normally when compiling a program, you tell the compiler how much optimization you want and what the input files are, it goes off and does its thing and comes back when it's done. Sometimes I think it might be nice if one instead told the compiler how much time to spend doing the compiling. It would then do the absolute minimum to make a working binary, and then gradually do more and more optimizations until the timer ran out. This would make the time it takes to rebuild things much more predictable. One downside is that the performance of the resulting program would depend on unpredictable things like how busy the build machine was. Another is that it's probably more efficient for a compiler to decide upfront what the optimizations should be rather than making lots of intermediate binaries of different optimization levels.

However, this sort of thing might be more useful as a run-time process - i.e. a JIT compiler. The system can monitor which bits of the code are being run most often (this is very easy to do - just interrupt at a random time and see what code is being run) and concentrate optimization efforts on those parts. The compilation can continue (gradually making the program faster and faster) until the point of diminishing returns is reached. I understand there's a Java Virtual Machine which can do this.

Fractals on the hyperbolic plane

August 30th, 2011Some amazing images have been made of fractal sets on the complex plane, but I don't think I've ever seen one which uses hyperbolic space in a clever way. I'm not counting hyperbolic tessellations here because the Euclidean analogue is not a fractal at all - it's just a repeated tiling.

The hyperbolic plane is particularly interesting because it is in some sense "bigger" than the Euclidean plane - you can tile the hyperbolic plane with regular heptagons for example. Now, you could just take a fractal defined in the complex plane and map it to the hyperbolic plane somehow, but that doesn't take advantage of any of the interesting structure that the hyperbolic plane has. It's also locally flat, so doesn't add anything new. If you use some orbit function that is more natural in the hyperbolic plane, I think something much more interesting could result. I may have to play about with this a bit.

Similarly, one could also do fractals on the surface of a sphere (a positively curved space - the hyperbolic plane is negatively curved and the Euclidean plane has zero curvature).

The slow intelligence explosion

August 29th, 2011Each new technology that we invent has improved our ability to create the next generation of technologies. Assuming the relationship is a simple proportional one, our progress can be modelled as for some measure of "intelligence" or computational power

. This differential equation has solution

- exponential growth, which matches closely what we see with Moore's law.

The concept of a technological singularity is a fascinating one. The idea is that eventually we will create a computer with a level of intelligence greater than that of a human being, which will quickly invent an even cleverer computer and so on. Suppose an AI of cleverness can implement an AI of cleverness

in time

. Then the equation of progress becomes

which has the solution

. But that means that at time

we get infinite computational power and infinite progress, at which point all predictions break down - it's impossible to predict anything about what will happen post-singularity from any pre-singularity time.

Assuming human technology reaches a singularity at some point in the future, every human being alive at that time will have a decision to make - will you augment and accelerate your brain with the ever-advancing technology, or leave it alone? Paradoxically, augmentation is actually the more conservative choice - if your subjective experience is being accelerated at the same rate as normal progress, what you experience is just the "normal" exponential increase in technology - you never actually get to experience the singularity because it's always infinitely far away in subjective time. If you leave your brain in its normal biological state, you get to experience the singularity in a finite amount of time. That seems like it's the more radical, scary and dangerous option. You might just die at some point immediately before the singularity as intelligences which make your own seem like that of an ant decide that they have better uses for the atoms of which you are made. Or maybe they'll decide to preserve you but you'll have to live in a universe with very different rules - rules which you might never be able to understand.

The other interesting thing about this decision is that if you do decide to be augmented, you can always change your mind at any point and stop further acceleration, at which point you'll become one of those for whom the singularity washes over them instead of one of those who are surfing the wave of progress. But going the other way is only possible until the singularity hits - then it's too late.

Of course, all this assumes that the singularity happens according to the mathematical prediction. But that seems rather unlikely to me. The best evidence we have so far strongly suggests that there are physical limits to how much computation you can do in finite time, which means that will level off at some point and progress will drop to zero. Or maybe growth will ultimately end up being polynomial - this may be a better fit to our physical universe where in time

we can access

computational elements.

To me, a particularly likely scenario seems to be that, given intelligence it always takes the same amount of time to reach

- i.e. we'll just keep on progressing exponentially as we have been doing. I don't think there's any reason to suppose that putting a human-level AI to work on the next generation of technology would make it happen any faster than putting one more human on the task. Even if the "aha moments" which currently require human ingenuity are automated, there are plenty of very time-consuming steps which are required to double the level of CPU performance, such as building new fabrication facilities and machines to make the next generation of ICs. Sure, this process becomes more and more automated each time but it also gets more and more difficult as there are more problems that need to be solved to make the things work at all.

In any case, I think there are number of milestones still to pass before there is any chance we could get to a singularity:

- A computer which thinks like a human brain albeit at a much slower rate.

- A computer which is at least as smart as a human brain and at least as fast.

- The development of an AI which can replace itself with smarter AI of its own design without human intervention.

Debugger feature

August 28th, 2011Here is a debugger feature that I think I would find really useful: avoid stopping at any given location more than once. This would be particularly useful when finding my around an unfamiliar bit of code - it would step into a function that I hadn't stepped into before, and then step over it the next time it was called, for example. In fact, I often find myself trying to do exactly this, just remembering which functions I've stepped into before (and often getting it wrong).

The other use case for this feature would be doing a sanity test once some new code has been written - the debugger would just stop on the new/changed lines of code so that you can make sure that they're working as expected.

One difficult part about this feature (especially for the second use case) would be keeping track of which locations have been stopped at even across recompiles (so the instruction pointer location won't necessarily be the same) and across source changes (so the source line location won't necessarily be the same either). So really to implement this properly we need to completely overhaul the entire compilation system so that it takes as input the deltas from the last compilation and performs the corresponding modifications to the output files (including both the binary and the list of which locations have been visited).

Such an overhaul would be extremely difficult, but would be useful for other reasons as well - in particular, it has the potential to make builds much faster, which shortens the edit/recompile/test sequence and makes programming much more pleasant. Also, it could integrate with editor syntax highlighting and code completion features, so that these always reflect the current state of the program.

The logic of the minimum wage

August 27th, 2011I've said before that I would prefer to replace the minimum wage with a guaranteed minimum income, but I've since thought of a justification for keeping the minimum wage.

In any economy, there are commodities - things which are easily available and the prices of which are easy to discover such as gold, oil or milk. One very important commodity is the hourly labour of a human who is healthy and competent at following simple instructions but otherwise unskilled. Even without a minimum wage law, there will be a natural price for such labour. It will fluctuate with time and space but will generally stay within a certain range. In some times and places it might actually go higher than the legislated minimum wage (which corresponds to a state of zero unemployment).

Having a legislated minimum wage has the effect of setting a scale factor (or a "gauge", for the physicists) of the economy as a whole - it's sort of like having an electrical circuit that isn't connected to anything and then connecting one part of it to ground. If the minimum wage is set too high then it will cause an inflationary pressure which will dissipate once everyone has a job again. If it's set too low then it will have a negligible effect anyway, since there would be very few people who would be unable to get a job for more than minimum wage. According to this theory, the minimum wage has nothing to do with making sure the poorest members of society are paid a respectable wage (which makes sense since a minimum wage is actually a regressive policy) - it's just an economic control factor.

Now, as an economic control factor, a minimum wage has a number of problems. One is that it takes a while for the inflation to eliminate unemployment after the market rate for labour goes down, so there's always some residual unemployment. Another is that people are not all equal - the labour of some people is just naturally below the basic labour rate (because they are unskilled and also either unhealthy or incompetent). While this is unfortunate, essentially forbidding them from working at all (by preventing employers from hiring them at the market rate) seems to add insult to injury (not to mention creating yet another dreaded poverty trap). A third problem is that there are many other ways that governments "ground" economies - in the electrical circuit analogy they're connecting various bits of a circuit to voltage sources that correspond to "what we think this ought to be" rather than what it actually is, which seems like a good way to get short circuits.