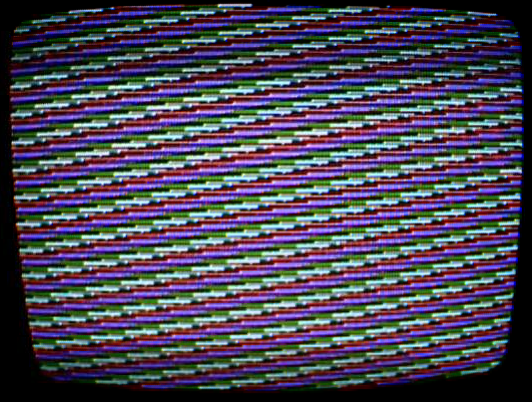

Once I had achieved CGA lockstep, I tried some test programs. This image was made by cycling through the possible palette registers as quickly as possible (i.e. it's running a big unrolled loop of "INC AX; OUT DX,AL" to the palette register):

That worked great, except that in making it I noticed that the pattern wasn't always starting the same way - half the time the first visible scanline had different set of colours. Somehow a bit of state was leaking through my lockstep routine!

After a while I figured out that it was due to the way I was getting the CRTC into lockstep with the CGA and CPU. The smallest frame that the 6845 CRTC can do is two character clocks (1 character by 2 scanlines - a 1 scanline high frame doesn't work with that CRTC). I thought I could get around this by going into high-res mode - then 1 character clock is 1 hchar so a frame would be 1 lchar and we'd be in a known place in the frame once we were in a known place in the CGA cycle.

Have you spotted the problem yet? The problem is that I don't know what the phase relationship is between the CGA clock and the CRTC clock - the first hchar of the frame could be the left or the right hchar within the lchar! And in fact, which it turned out to be was decided at random on startup.

With a bit of fiddling I eventually came up with a way to get the CRTC into lockstep as well. The trick hinges on the fact that if we set up the CRTC parameters so that one of the scanlines is displaying a normal visible image and one is overscan, we can tell which scanline is which by reading the display enable bit of the CGA status register. Then we delay an odd number of lchars if the display enable bit is set one way and an even number of lchars if it's set the other way (it doesn't matter which is which). Because we want to keep the CGA and CPU in lockstep as well, the difference in the codepath lengths must also be a multiple of 3 lchars, so delaying for X lchars one way and X+3 the other works fine.

That's about all there is to it. The full lockstep routine is on github. Once lockstep is entered it'll persist until you wait for a time that depends on an external event (such as reading from disk/serial/parallel/ethernet/joystick or waiting for a keystroke). That doesn't mean that lockstep mode games and trackmos are impossible, though. The keyboard can be read by polling (pretty much all PC software directly or indirectly uses an interrupt for keyboard access but it isn't compulsary and I've done it by polling a few times). You just have to make sure the code paths are the same length no matter whether a key was pressed or not and no matter which key was pressed if there is one, which can be done by adding suitable delays. Disk access is a bit more difficult, since there's going to be a DMA bus access at some unpredictable point, and after it's happened you'll be out of lockstep. I think the solution is to HLT after the disk access is complete and restart execution on a timer interrupt. In the event that lockstep between CGA and PIT isn't possible, regaining lockstep once the timer interrupt has occurred should be possible by delaying for N ccycles for some N between 0 and 15 and a CGA memory access. Another possible way is to make sure the CPU is running code that is either:

- BIU-bound with no wait states, or

- that is EU-bound and never exhausts the prefetch queue

for the entire time that the accesses might be happening. That way the time taken to run the code doesn't depend on exactly when the accesses occur.